Scriptly Helps Pharmacies Identify Trends in Real Time with Reveal

Security with embedded analytics gets more complex when AI enters the picture. This article breaks down the key risks, offers best practices to prevent data leaks, and explains how Reveal gives SaaS teams full control over AI endpoints, data flow, and governance. If you're embedding AI-powered analytics into your product, Reveal helps you do it securely by design.

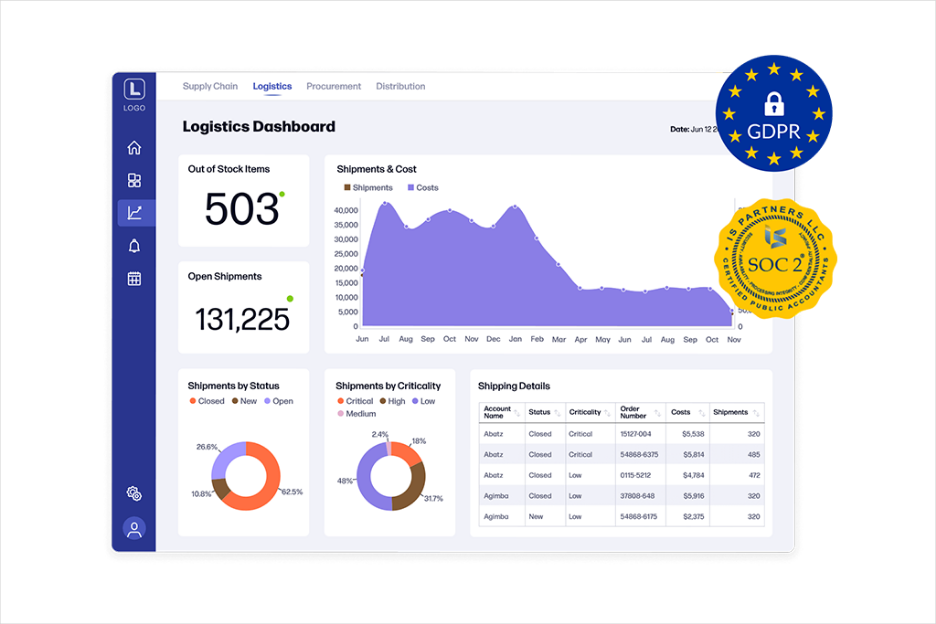

Security has always been a top priority for ISVs and SaaS platforms. But when teams implement embedded analytics from third-party providers, that priority turns into a pressure point.

Customer data moves beyond internal walls. Dashboards surface information to external users. And now, with AI in the mix, the risks increase. Teams must think about how data is accessed, processed, and exposed, especially when models are involved.

51% of tech leaders rank security as their top software development challenge for 2025, while 73% plan to expand their use of AI. This makes secure integration non-negotiable.

To help you navigate this, the article covers the major challenges you may face when integrating AI-powered embedded analytics. You will learn best practices for protecting every user, how to avoid data leaks through the new AI layer, and how Reveal helps secure embedded analytics.

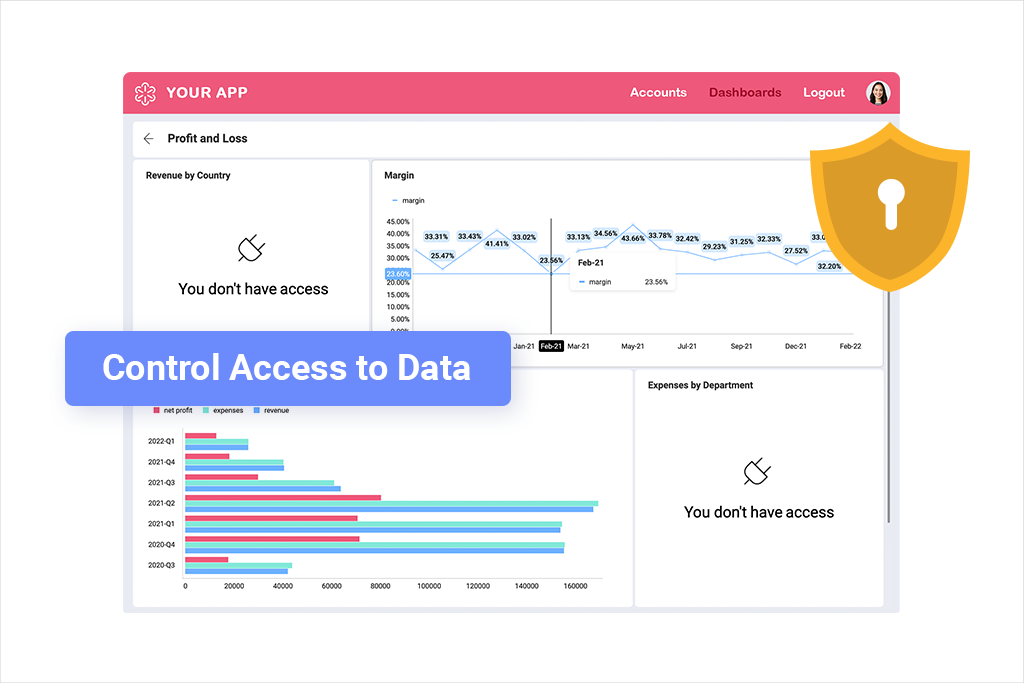

When you stop sending users to other platforms in search of insights, the security risks don’t go away. On the contrary, they multiply. When data moves from internal systems into user-facing dashboards, the architecture needs to work harder to keep it protected. This starts with access. Row-level security and role-based dashboards must stay accurate across every tenant, environment, and user type. A single misconfiguration could expose one customer’s data to another and violate compliance controls.
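As a rough illustration of the tenant and role checks described above, here is a minimal Python sketch. The `UserContext` type, the `ROLE_COLUMNS` mapping, and the sample rows are all hypothetical; a real product would enforce these rules in its data layer and load policies from its own store.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserContext:
    user_id: str
    tenant_id: str
    role: str


# Hypothetical role-to-column policy, used only for this sketch.
ROLE_COLUMNS = {
    "admin": {"order_id", "customer", "amount", "region"},
    "viewer": {"order_id", "region"},
}


def scoped_rows(rows, ctx):
    """Return only the caller's tenant rows, reduced to columns the role may see."""
    allowed = ROLE_COLUMNS.get(ctx.role, set())
    if not allowed:
        return []  # unknown role: deny by default
    return [
        {key: value for key, value in row.items() if key in allowed}
        for row in rows
        if row["tenant_id"] == ctx.tenant_id
    ]


ROWS = [
    {"tenant_id": "acme", "order_id": 1, "customer": "Ann", "amount": 120, "region": "EU"},
    {"tenant_id": "globex", "order_id": 2, "customer": "Bob", "amount": 75, "region": "US"},
]

viewer = UserContext(user_id="u1", tenant_id="acme", role="viewer")
viewer_rows = scoped_rows(ROWS, viewer)
```

The deny-by-default branch is the important design choice here: a role missing from the policy sees nothing instead of everything.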

Authentication and authorization become more layered. Embedded components need to inherit existing identity rules, whether you’re using SSO, OAuth, or a custom provider. If those controls break down, users may gain access to views they were never meant to see.

AI-powered features increase scrutiny. Even when models only process schema metadata or aggregated results, compliance leaders want proof. What data leaves the environment? Who manages the endpoint? What logs show it happened?

Legacy BI tools rarely support full transparency across embedded workflows. Monitoring and alerts often cover app-level events, not analytics-level risk. That makes auditability hard to enforce. It also limits governance, especially when insights are delivered automatically or when users interact with dashboards in unpredictable ways.

Security leaders need to see what the platform exposes and control every path data might take. Without that visibility, embedding analytics into a SaaS product can feel like opening the door to unmanaged risk.

AI-powered embedded analytics doesn’t have to weaken your security posture. SaaS teams and analytics vendors have developed clear rules to enforce security at every stage of integration.

Missing even one of these layers can damage your credibility and lead to serious financial and legal consequences.

Security with embedded analytics starts at the integration layer.

Embedded dashboards need to generate logs like any other business-critical service.

Auditability is non-negotiable in regulated environments.

Security doesn’t have to get in the way of user experience. With white-label analytics, you can deliver clean, branded dashboards that still obey every access rule.

Securing embedded analytics is already complex. AI adds a new layer of risk. It introduces dynamic outputs, model endpoints, and unpredictable data flows, none of which are covered by traditional BI security. Without strict controls, AI-powered features can turn existing exposure points into active vulnerabilities.

So, when choosing an embedded analytics vendor, make sure the product prevents data leaks through its AI layer. Here’s what to look for:

AI features should never receive raw data by default. Limit inputs to schema metadata, summaries, or pre-aggregated results. Avoid any model interaction that pulls sensitive records, especially across tenants.

Use strict scoping logic to define what fields or tables the model can access. Anything exposed to AI should be explicitly approved and not just inherited from a shared data source.
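One way to picture this scoping logic is an explicit allowlist that reduces a full schema to approved metadata before anything is sent to a model. The sketch below is illustrative only: `APPROVED_FIELDS`, `build_model_context`, and the sample schema are assumptions, not part of any vendor API.

```python
# Hypothetical allowlist: only explicitly approved table/column pairs may reach the model.
APPROVED_FIELDS = {
    "orders": {"order_date", "region", "total"},
}


def build_model_context(schema, approved=APPROVED_FIELDS):
    """Reduce a full schema to approved metadata (names and types only, never rows)."""
    context = {}
    for table, columns in schema.items():
        allowed = approved.get(table)
        if not allowed:
            continue  # table was never approved, so it is dropped entirely
        kept = {name: dtype for name, dtype in columns.items() if name in allowed}
        if kept:
            context[table] = kept
    return context


FULL_SCHEMA = {
    "orders": {
        "order_date": "date",
        "region": "text",
        "total": "decimal",
        "card_number": "text",
    },
    "patients": {"name": "text", "diagnosis": "text"},
}

model_context = build_model_context(FULL_SCHEMA)
```

Because approval is per table and per column, a new sensitive column added to a shared data source stays invisible to the model until someone approves it.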

You need full control over the AI infrastructure. That means choosing the endpoint, configuring model behavior, and defining response handling.

Use customer-managed endpoints: OpenAI, Azure, AWS, or private models. This avoids third-party routing you can’t audit or restrict. Your team should manage where data goes, what’s logged, and how insights return.
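A simple guard for customer-managed endpoints is to refuse any model URL whose host your team has not approved. The following sketch assumes a hypothetical `ALLOWED_AI_HOSTS` set with placeholder hostnames; it shows the policy check only and does not call any model.

```python
from urllib.parse import urlparse

# Placeholder hosts standing in for endpoints your team operates; not real services.
ALLOWED_AI_HOSTS = {"llm.internal.example.com", "ai-gateway.example.com"}


def validate_endpoint(url, allowed_hosts=ALLOWED_AI_HOSTS):
    """Return the URL unchanged if it is HTTPS and its host is approved, else raise."""
    parsed = urlparse(url)
    if parsed.scheme != "https":
        raise ValueError("AI endpoint must use HTTPS")
    if parsed.hostname not in allowed_hosts:
        raise ValueError(f"AI endpoint host not approved: {parsed.hostname}")
    return url


endpoint = validate_endpoint("https://llm.internal.example.com/v1/chat")
```

Running this check at configuration time, and again before each request, keeps a mistyped or tampered setting from silently routing data to an unapproved host.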

AI features should always be opt-in. Users must know when a model is running, what data it’s using, and how it’s influencing what they see.
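Opt-in behavior can be modeled as per-tenant settings where every AI capability starts disabled. This is a minimal sketch; `TenantAISettings`, the feature names, and the `SETTINGS` store are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TenantAISettings:
    # Every capability defaults to off; a tenant admin must enable it explicitly.
    enabled_features: set = field(default_factory=set)


def ai_feature_enabled(settings_by_tenant, tenant_id, feature):
    """Return True only if the tenant exists and has explicitly enabled the feature."""
    settings = settings_by_tenant.get(tenant_id)
    return settings is not None and feature in settings.enabled_features


# Hypothetical settings store: "acme" opted in to chat only, "globex" opted in to nothing.
SETTINGS = {
    "acme": TenantAISettings(enabled_features={"chat"}),
    "globex": TenantAISettings(),
}
```

A call such as `ai_feature_enabled(SETTINGS, "acme", "chat")` would gate both the UI element and the backend request, so users only see AI output where it has been switched on.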

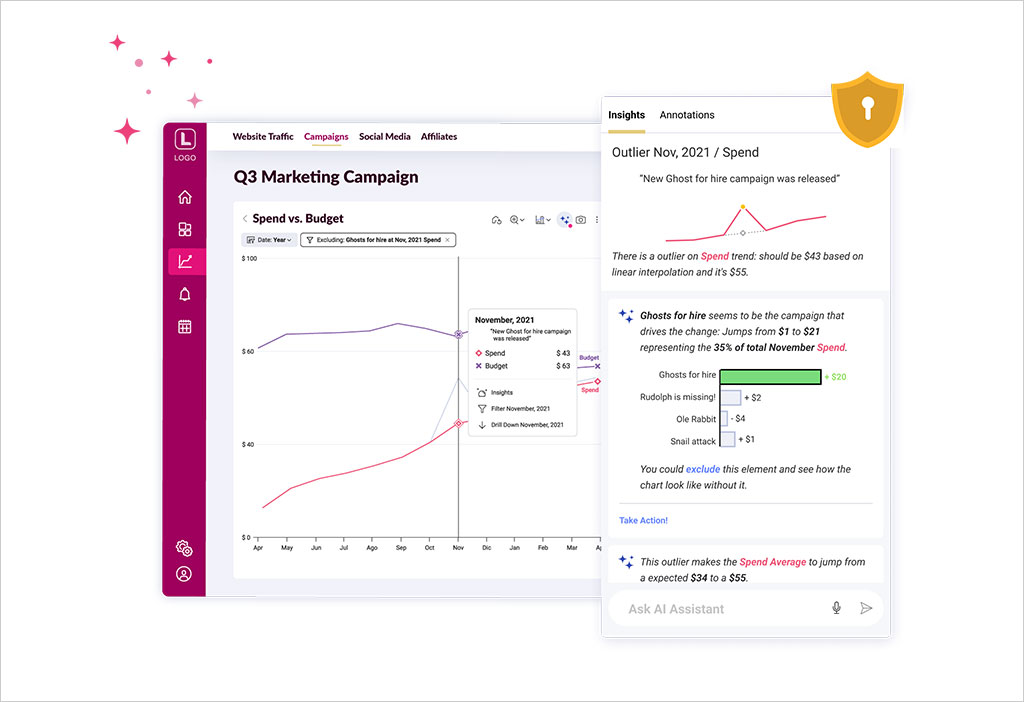

Log every AI interaction with metadata: user ID, query or prompt, model type, and timestamp. If something goes wrong, you need a full trail.

This also supports explainable AI. Auditors, product teams, and even customers need to understand how automated insights were generated.
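The audit trail described above might be captured as one JSON line per interaction. The sketch below uses only the Python standard library; the `audit_record` helper, the field names, and the model name are illustrative assumptions.

```python
import json
from datetime import datetime, timezone


def audit_record(user_id, prompt, model, now=None):
    """Build one JSON line describing an AI interaction."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return json.dumps(
        {"user_id": user_id, "prompt": prompt, "model": model, "timestamp": timestamp},
        sort_keys=True,
    )


# A fixed timestamp is passed here only to make the example reproducible.
line = audit_record(
    "u1",
    "Top regions by revenue?",
    "example-model",
    now=datetime(2025, 1, 1, tzinfo=timezone.utc),
)
```

Prompts can themselves contain sensitive text, so a production log would typically sit behind the same access controls as the data it describes.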

What the model returns can be just as sensitive as what it received. Be cautious of contextual insights that could leak data indirectly—through over-personalization, naming patterns, or inferred metrics.
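One narrow piece of this, names leaking into generated text, can be sketched as a post-processing filter that masks known sensitive terms before a response is displayed. This is a deliberately naive illustration: `redact_output` and the term list are hypothetical, and string matching will not catch inferred metrics or other indirect leaks.

```python
import re


def redact_output(text, sensitive_terms, mask="[redacted]"):
    """Mask each sensitive term in model output, matching case-insensitively."""
    # Longest terms first, so a short term cannot split a longer one.
    for term in sorted(sensitive_terms, key=len, reverse=True):
        text = re.sub(re.escape(term), mask, text, flags=re.IGNORECASE)
    return text


# Example: names of other tenants that must never appear in this tenant's view.
OTHER_TENANTS = {"globex", "initech"}
safe_text = redact_output("Globex spent more than ACME last quarter.", OTHER_TENANTS)
```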

Legacy BI tools weren’t built for this level of dynamic interaction. They don’t provide endpoint control, prompt filtering, or AI-specific governance. They assume human-designed dashboards, not machine-generated responses.

You need new governance structures. That includes endpoint policies, feature flags, and model monitoring, alongside traditional audit and access control.

AI-powered analytics can unlock real value. But if you’re embedding it into a customer-facing product, you have to lock down every input, process, and output. That’s the only way to deliver secure automation without opening new attack surfaces.

The risks around AI-powered analytics are real—but they’re manageable with the right architecture. That’s why Reveal was built with control, transparency, and security at the center of every AI feature.

Reveal gives you full control over how AI interacts with your data. Nothing is enabled by default. Nothing routes through Reveal servers. And no model access happens unless you configure it.

Every AI capability in Reveal is off until you turn it on. Whether you’re building conversational interfaces or enabling automated insights, you decide when and how to introduce AI.

Your dev team configures everything through JSON and AI-specific APIs. There are no surprises, no hidden dependencies, and no backend processes running without your knowledge.

Reveal doesn’t own or operate any large language models. You choose which model to connect—OpenAI, Azure, AWS, a private endpoint, or even a small local model.

Reveal acts as the interface. You manage the engine. That means you define the endpoint, the behavior, and the compliance boundaries.

Reveal’s conversational AI only sends schema metadata like table names, column types, and relationships. The model never sees user queries, dashboards, or raw records.

If you use Reveal’s data agent API, only pre-aggregated, context-aware values are passed to the model. The result is secure embedded dashboards powered by AI, without exposing sensitive records.

Each AI interaction can be logged with user ID, model used, time of request, and output structure. This supports traceability and explainable AI for both product teams and compliance audits.

You always know who triggered what, and what the model returned.

This model works in sectors where most tools fail. For example, Sensato, a cybersecurity firm in the healthcare space, embedded Reveal to power analytics for hospital threat detection, without compromising HIPAA or patient privacy.

They needed AI-powered analytics that respected data boundaries. Reveal delivered that, with zero backend exposure and full customer control.

The pressure to embed AI into SaaS products is real. But speed without control is a liability. AI-powered analytics can’t be treated like any other feature. They require a different level of discipline—around access, data flow, and model behavior.

That’s why a security-first approach isn’t optional. It’s the only viable path forward for teams responsible for customer data, compliance, and platform integrity.

You need architecture that puts you in control. You need tooling that respects the rules you’ve already set. You need governance that works across dashboards, models, and real-time insight delivery.

Reveal was built for that.

From opt-in AI features and customer-managed endpoints to complete auditability and strict data boundaries, Reveal helps SaaS teams deliver AI-powered insights without sacrificing trust, speed, or compliance.

Ready to see how Reveal can help you embed secure, AI-powered analytics on your terms? Book a personalized demo.
